Space Pool

Placement with Two Big Ears, Summer 2014

Project overview

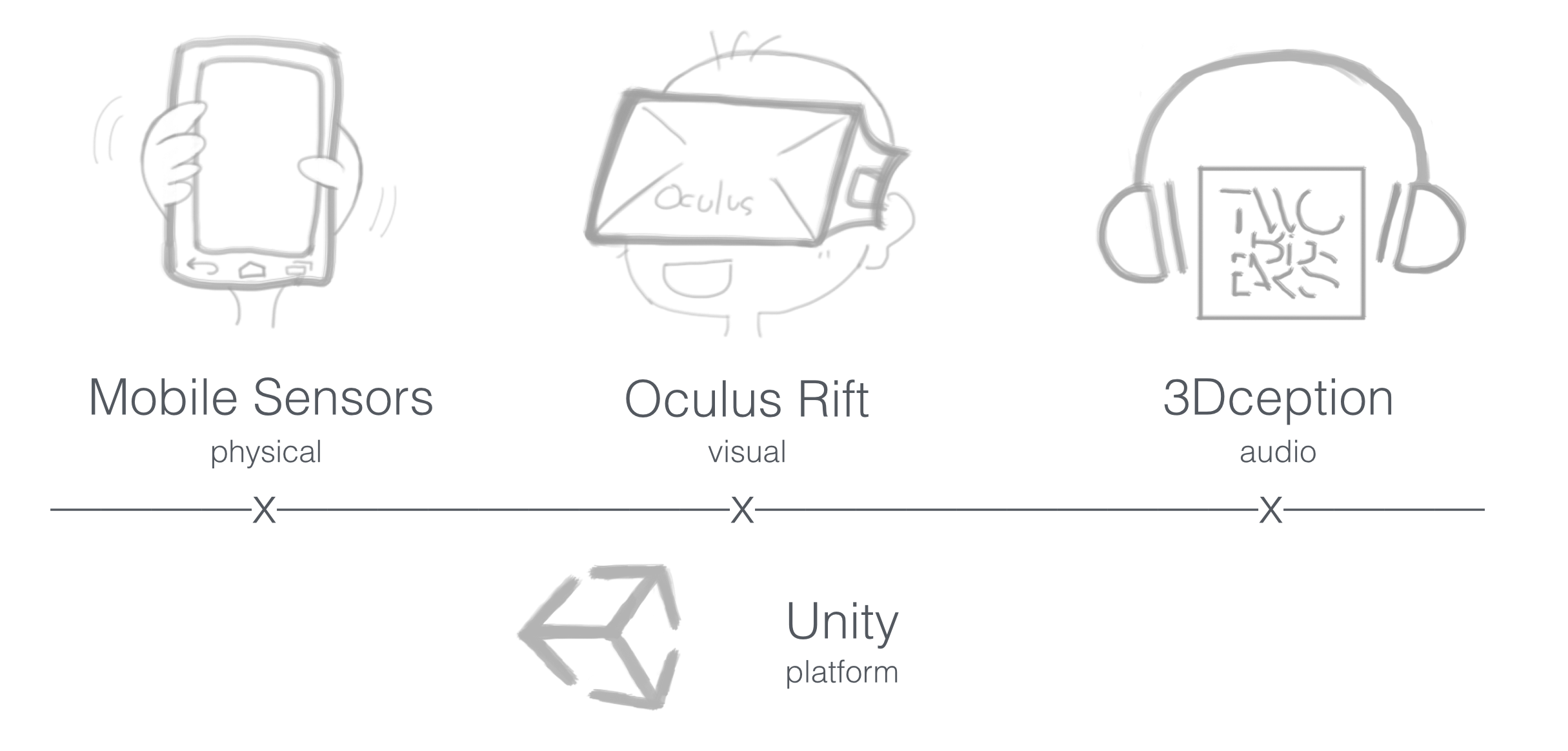

The project set by Two Big Ears for the placement was to explore sensor-driven interaction on mobile devices using visuals and sound. After two weeks of brainstorming, we decided to combine mobile sensors (physical input), the Oculus Rift (visuals) and 3Dception (audio) to give players an interesting experience. We use Unity as the development platform because it works well with all of the above.

Mobile Sensors

The built-in sensors differ from device to device. For this stage, we narrowed the target device down to Android smartphones, since they have the basic sensors the project needs. Android supports three broad categories of sensors: motion sensors, position sensors and environmental sensors.

So far, we have mainly worked with motion sensors and position sensors. In the prototypes, we infer player gestures and motions such as shaking, tilting and swinging.
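Tilt is the simplest of these to infer. The sketch below is a rough illustration, not the project's code. It assumes Android's accelerometer convention, where a phone lying flat and face up reads about +9.81 m/s² on its z-axis. A simple low-pass filter keeps mostly the slowly changing gravity component, and pitch and roll are then read off that gravity vector. The function names and the smoothing factor are illustrative choices.

```python
import math

def low_pass(previous, sample, alpha=0.8):
    """Blend a new (x, y, z) accelerometer sample into the running estimate.
    A higher alpha gives a smoother estimate that tracks mostly gravity."""
    return tuple(alpha * p + (1.0 - alpha) * s for p, s in zip(previous, sample))

def tilt_angles(gravity):
    """Return (pitch, roll) in degrees from a gravity vector in device coordinates.
    pitch: how far the device y-axis points above the horizontal plane.
    roll:  how far the device x-axis points above the horizontal plane.
    Only meaningful while gravity dominates, i.e. the phone is not being shaken."""
    gx, gy, gz = gravity
    pitch = math.degrees(math.atan2(gy, math.hypot(gx, gz)))
    roll = math.degrees(math.atan2(gx, math.hypot(gy, gz)))
    return pitch, roll

if __name__ == "__main__":
    g = (0.0, 0.0, 9.81)                 # phone starts flat on the table
    for _ in range(50):                  # phone is then held upright
        g = low_pass(g, (0.0, 9.81, 0.0))
    print(tilt_angles(g))                # pitch approaches 90 degrees
```

Because the filter lags behind fast motion, this approach suits slow tilting. Shakes and swings show up in the high-frequency part of the signal that the filter removes.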

In addition, the touch screen, a central part of any smartphone, is also a sensor worth studying, because so much everyday phone use is interaction with the screen. In the prototypes, we map the movement of the player's finger to the movement or rotation of the avatar in the game.
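One possible mapping from finger movement to the avatar is sketched below: a horizontal drag turns the avatar, and a vertical drag moves it along the direction it faces. The split between turning and moving, the `Avatar` class and the gain values are all hypothetical. The sketch also assumes screen y grows downwards, as in Android touch events.

```python
import math
from dataclasses import dataclass

@dataclass
class Avatar:
    x: float = 0.0
    z: float = 0.0
    yaw_deg: float = 0.0  # 0 degrees = facing +z

def apply_drag(avatar, dx_px, dy_px, move_per_px=0.01, turn_per_px=0.2):
    """Hypothetical mapping from a finger drag (pixels since the last frame) to avatar motion.
    Horizontal drag turns the avatar; vertical drag moves it along its facing direction.
    Screen y is assumed to grow downwards, so dragging up (negative dy) moves forwards."""
    avatar.yaw_deg = (avatar.yaw_deg + dx_px * turn_per_px) % 360.0
    distance = -dy_px * move_per_px
    yaw = math.radians(avatar.yaw_deg)
    avatar.x += math.sin(yaw) * distance
    avatar.z += math.cos(yaw) * distance
```

Separate gains for turning and moving let each be tuned independently. This matters because players are far more sensitive to unexpected rotation than to unexpected translation.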

Oculus Rift

The Oculus Rift is a headset that gives players an immersive virtual reality experience. Two versions of the development kit have been released so far; we use DK1 with SDK 0.3 for this project.

With the headset, players can look around easily. Building on the framework and functions provided by DK1 and SDK 0.3, we extended the code so that players can aim at objects and steer the view with the headset.
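Aiming and steering with the headset both come down to a gaze ray built from the head orientation. The sketch below assumes a y-up coordinate system in which yaw 0 looks along +z, and treats each target as a bounding sphere. It picks the nearest sphere the ray hits, and a steering mode moves the player along the same ray. The names and conventions are illustrative only. In Unity this job would normally be handled by the engine's own raycasting rather than hand-written intersection code.

```python
import math

def gaze_direction(yaw_deg, pitch_deg):
    """Unit vector the headset looks along (y up, yaw 0 = +z, positive pitch = looking up)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.sin(yaw), math.sin(pitch), math.cos(pitch) * math.cos(yaw))

def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to the sphere surface, or None if the ray misses."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    near = -b - root
    t = near if near >= 0 else -b + root  # use the far root if the origin is inside the sphere
    return t if t >= 0 else None

def aimed_target(head_pos, yaw_deg, pitch_deg, targets):
    """Name of the nearest target hit by the gaze ray; targets maps name -> (center, radius)."""
    direction = gaze_direction(yaw_deg, pitch_deg)
    best_name, best_t = None, math.inf
    for name, (center, radius) in targets.items():
        t = ray_sphere_distance(head_pos, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name

def steer(position, yaw_deg, pitch_deg, speed, dt):
    """Steering mode: keep moving forward, in whatever direction the headset faces."""
    direction = gaze_direction(yaw_deg, pitch_deg)
    return tuple(p + d * speed * dt for p, d in zip(position, direction))
```

Sharing one gaze direction between aiming and steering keeps the two behaviours consistent: whatever the player is looking at is also where they will travel.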

3Dception

3Dception, published by Two Big Ears, is a binaural audio system that makes it possible to hear a sound from any point in space over any pair of headphones in real time (Two Big Ears). It helps to enhance the immersive experience, and we hope the demos from this project will show the public what 3Dception can do.

Unity

Unity is a powerful engine for developing 3D and 2D games and applications. With game objects (cube, light, camera, etc.) and their components, such as colliders, rigidbodies, mesh renderers and scripts (JavaScript, C#, Boo), it is convenient to build a game world and give objects the desired behaviours. We also use Maya (3D modelling) and Photoshop (graphic design) to support the visual design and make the game look better.

How they work together

Communication between the phone and the computer is handled by Open Sound Control (OSC), a protocol for networking sound synthesisers, computers and other multimedia devices (Wikipedia). In this project, we use it to send the data tracked by the phone's sensors to Unity scripts over the network in real time.
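An OSC message is simple enough to build by hand. Under the OSC 1.0 specification, the address and the type-tag string are each null-terminated and padded to a multiple of four bytes. The int32 and float32 arguments then follow in big-endian order. The sketch below encodes one accelerometer reading. The address `/phone/accel` and the function names are hypothetical, and in practice an existing OSC library on each side would usually do this work.

```python
import struct

def osc_string(text):
    """OSC-string: ASCII bytes plus a terminating null, padded with nulls to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def encode_osc_message(address, *args):
    """Encode an OSC 1.0 message with int32 ('i') and float32 ('f') arguments, big-endian."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; not handled in this sketch
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(type_tags) + payload
```

Sending the message is then a single UDP datagram. For example, `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(encode_osc_message("/phone/accel", ax, ay, az), (host, port))`, where `host` and `port` are whatever the Unity-side receiver listens on.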

The Oculus Rift has its own integration package for Unity. It provides developers with a prefab game object that has left and right cameras, a character controller and the OVR scripts attached. The OVR scripts collect data from the Oculus Rift device and use it to control the cameras and the character. We can use this game object as the player in our game: it follows all the rules of Unity and has the features of the Oculus Rift as well.

3Dception also integrates well with Unity.

With all the data integrated in Unity, together with Unity's own functions, we can start building the intended experience.

Prototypes

Prototype 1

Description

- find the sounds and capture them

Main Experience

- walk around freely by moving the finger on the touch screen

- focus, swipe, and then jump

- multi-level game world - lifts, dropping down

Discussion

- natural/related actions?

- without the ground?

- click

- body direction & head direction

Prototype 2

Description

- strike the cubes into the pockets

Main Experience

- swim through the game space by making arm-pulling strokes with the phone in hand

- focus, shake, and then strike away the cube

- floating
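The "focus, shake, and then strike" step needs some form of shake detection. Below is a minimal sketch, assuming timestamped raw accelerometer samples in m/s². A spike is counted each time the acceleration magnitude departs from gravity by more than a threshold. A shake is reported when several spikes fall within a short window. Hysteresis stops one long spike from being counted repeatedly. The threshold, peak count and window are assumed values that would need tuning on a real device.

```python
import math
from collections import deque

GRAVITY = 9.81  # m/s^2

class ShakeDetector:
    """Hypothetical shake detector: counts acceleration spikes within a sliding time window."""

    def __init__(self, threshold=12.0, min_peaks=3, window=0.8):
        self.threshold = threshold  # deviation from gravity (m/s^2) that counts as a spike
        self.min_peaks = min_peaks  # spikes needed to call it a shake
        self.window = window        # seconds
        self._peaks = deque()
        self._armed = True          # hysteresis: re-arm only after the signal calms down

    def update(self, t, ax, ay, az):
        """Feed one sample taken at time t (seconds); return True when a shake is recognised."""
        deviation = abs(math.sqrt(ax * ax + ay * ay + az * az) - GRAVITY)
        if self._armed and deviation > self.threshold:
            self._peaks.append(t)
            self._armed = False
        elif deviation < self.threshold / 2:
            self._armed = True
        # Forget spikes that are older than the window.
        while self._peaks and t - self._peaks[0] > self.window:
            self._peaks.popleft()
        if len(self._peaks) >= self.min_peaks:
            self._peaks.clear()
            return True
        return False
```

Requiring several spikes in quick succession is what separates a deliberate shake from a single arm-pulling stroke, which produces one large spike.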

Discussion

- the player has to turn their body around to look behind

Prototype 3

Description

- fly like Superman

Main Experience

- similar to steering - keep moving and change direction by turning the headset

Discussion

- causes too much motion sickness

- limited up & down movement

Demo - Space Pool

Playful!

Enhanced Experience

- swim forward or backward by turning over the phone

- rotate by touch screen joystick

- smoother movement

- 3Dception - sound effects
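The first three enhancements above can each be expressed as a small function. Below is a sketch under stated assumptions:

- The swim direction comes from the sign of the accelerometer's z-axis. Android reads about +9.81 m/s² face up and about -9.81 m/s² face down.
- The joystick's horizontal axis lies in [-1, 1] and passes through a dead zone.
- Speed eases towards its target by exponential smoothing.

The thresholds, rates and time constant are illustrative, not the values used in the demo.

```python
import math

def swim_direction(az, threshold=3.0):
    """+1 (forward) when the screen faces up, -1 (backward) when it faces down, 0 in between.
    az is the accelerometer z reading in m/s^2; the threshold is an assumed dead band."""
    if az > threshold:
        return 1
    if az < -threshold:
        return -1
    return 0

def joystick_yaw_rate(stick_x, max_rate_deg=90.0, dead_zone=0.15):
    """Map a virtual joystick's horizontal axis in [-1, 1] to a yaw rate (deg/s) with a dead zone."""
    if abs(stick_x) < dead_zone:
        return 0.0
    scaled = (abs(stick_x) - dead_zone) / (1.0 - dead_zone)
    return math.copysign(min(scaled, 1.0) * max_rate_deg, stick_x)

def smooth(current, target, dt, time_constant=0.25):
    """Exponential smoothing: move a fraction of the way towards the target each frame.
    Using exp(-dt / tau) keeps the easing consistent across different frame rates."""
    alpha = 1.0 - math.exp(-dt / time_constant)
    return current + (target - current) * alpha
```

The dead band in `swim_direction` keeps the direction from flickering while the phone is held on its edge. The joystick dead zone does the same for a resting thumb.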

Other Features

- aiming ray

- feedback - hitting the wall, scoring a goal

- random pockets - lifetime, position, appearance

- tips
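Randomised pockets could be managed roughly as follows. Each pocket gets a random position, appearance and lifetime. Expired pockets are removed, and new ones are spawned to keep a fixed number in play. The `Pocket` class, the colour list, the arena size and the lifetime range are all hypothetical.

```python
import random
from dataclasses import dataclass

COLOURS = ("red", "green", "blue", "yellow")  # hypothetical appearance options

@dataclass
class Pocket:
    position: tuple    # (x, y, z) inside the play area
    colour: str
    expires_at: float  # game time (s) when the pocket disappears

def spawn_pocket(now, rng, half_extent=10.0, min_life=5.0, max_life=15.0):
    """Create a pocket with a random position, appearance and lifetime."""
    position = tuple(rng.uniform(-half_extent, half_extent) for _ in range(3))
    return Pocket(position, rng.choice(COLOURS), now + rng.uniform(min_life, max_life))

def update_pockets(pockets, now, rng, target_count=3):
    """Drop expired pockets and spawn new ones so target_count pockets stay in play."""
    alive = [p for p in pockets if p.expires_at > now]
    while len(alive) < target_count:
        alive.append(spawn_pocket(now, rng))
    return alive
```

Passing in a `random.Random` instance makes a session reproducible when it is seeded, which helps when testing how the pockets feel.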

Discussion

- two modes: sit & stand

- more accurate gesture recognition

Technical points

- Open Sound Control (OSC)

- OVR package

- Functions in Unity

Placement process

The placement has been very valuable to me in many ways.

The most significant improvement is in my problem-solving ability. Given an open-ended project, I had the chance to be involved in every stage of development and in the challenges that arose along the way.

Firstly, how do you find the subject? For the first two weeks, I did case studies and built some quick prototypes on platforms I was familiar with to explore possible ideas, which are summarised in fig. 2. During that time, Abesh and Varun kept discussing ideas with me and inspired me with interesting cases. The idea became clear once we distilled the expanded thoughts.

Summary of Case Studies

Secondly, how do you find a solution when facing technical difficulties? This is a common problem for any developer. I usually seek help in three main ways: 1) official tutorials or documentation; 2) experience shared online; 3) experts around me. The placement has trained me to combine them skilfully at the right time. For example, I find it is always good to start with the tutorials and documentation offered by the tool's publisher, because this information is straightforward and contains fewer mistakes. At the same time, I never hesitate to ask questions online, because some small issues only surface during real development, and developers around the world have probably discussed them already. Also, one advantage of teamwork is that I can tell my bosses what I am working on from time to time; if they have related experience, they can point me in the right direction, which saves me a lot of time. However, none of this works well unless I also think actively for myself.

Thirdly, what to do next? The intensive training of the placement has brought me to a better mindset: keep improving.

Formerly, I would usually stop once I had found one way to implement a feature. Now I keep working on a better solution if there is one. For example, when making the swimming movement of the player avatar, I first used Unity's force function by adding a rigidbody to it. This had the desired effect, but one camera ray would drop while another was emitted intermittently. I fixed this by disabling gravity on the rigidbody (another way is to enable both gravity and 'Is Kinematic', but that stops the force function from working). Although the problem was solved, I thought the approach was redundant and that there must be a cleverer way. So I took a closer look at how the different physics components compare. It turns out that humanoid characters are typically implemented using Character Controllers (Unity Manual). So I removed the rigidbody and wrote my own movement function: a parabolic equation relating distance to time. Although the improvement is invisible to players, the structure is neater and I am happy with it.
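The report does not give the exact equation, so what follows is only one plausible form of such a parabolic movement function, stated as an assumption. A stroke covers distance D over duration T with displacement d(t) = D(2t/T - (t/T)²). The speed therefore starts at 2D/T and falls linearly to zero, which reads as a glide after each pull. The per-frame difference is the kind of displacement that could be passed to a Character Controller's `Move` call. The function names are hypothetical.

```python
def stroke_displacement(t, distance, duration):
    """Distance covered t seconds into a stroke: d(t) = D * (2*tau - tau**2), tau = t / T.
    tau is clamped to [0, 1], so the avatar rests at D once the stroke has finished."""
    tau = min(max(t / duration, 0.0), 1.0)
    return distance * (2.0 * tau - tau * tau)

def frame_step(t, dt, distance, duration):
    """Displacement to apply this frame, i.e. the distance covered between t - dt and t."""
    return stroke_displacement(t, distance, duration) - stroke_displacement(t - dt, distance, duration)
```

A downward-opening parabola is a natural fit here: it is the displacement curve of constant deceleration, so the avatar slows down smoothly without any physics engine involved.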

Beyond technique, thanks to Abesh and Varun's high standards for user experience and Jon's tutorials, I kept exploring new experiences for players. For instance, in the first prototype, players move around by sliding a finger on the phone screen. But it was pointed out that this interaction is not very natural, because it is hard for players to relate the movement to their real-life experience. At the same time, the interaction in which players fly to a destination by swiping invited comparison with using Google Street View. Based on these discussions, I made the second prototype, in which players move around by making arm-pulling strokes with a phone in their hand and strike aimed objects away by shaking the phone. Another example: in the earlier prototypes, players could be confused between the looking direction and the body direction. So in the later prototypes, I tried to find interactions that feel better when players are sitting on a chair, including the headset steering mode, a touch-screen joystick, and reversing the movement direction by turning over the phone. Throughout, a smoother experience remained a constant concern.

The placement was also good practice in picking up a new programming language. It was the first time I had used C#. Because of the time limit, instead of beginning with a systematic study, I had to dive straight into using C# in Unity to meet the needs of the project. This mission-oriented learning is an efficient way of practising: it has improved my ability to use APIs flexibly and to choose a better method by comparing alternatives. Every day, my knowledge of the language and the development environment steadily expanded.

Moreover, exploring a new SDK brought me great fun and satisfaction. The SDK for developing Oculus Rift products in Unity was completely new to me. While analysing its structure, I gained a deeper understanding of how to build a system and make its components work well together. This will be very helpful when I want to bring hardware 'alive' with software in the future, for example a robot.

The three-month placement has also exposed some of my weaknesses. Although my knowledge of mathematics and physics has supported the work well so far, I am afraid it will not be enough for more complicated computation, such as building a gesture library. My efficiency curve also does not fully fit the working timetable: it is hard for me to stay concentrated for eight hours. I am getting used to the timetable, but I think I still prefer a free style, working only when I am efficient. In addition, I am not yet a good project manager. Because I lack experience in estimating difficulty, my plan always lags behind the work and I have to adjust it substantially all the time, which means I am weak at laying out a realistic timeline for a project.

To sum up, the placement has raised my confidence in facing new challenges. It has taught me how to dig deeper into a subject and how to work within a company. I now feel ready to take on more research activities.

Special thanks to my two lovely bosses, Varun Nair and Abesh Thakur, and to Jon and Steve. I had the best summer ever! ❤